Linux ZynqMP PS-PCIe Root Port Driver

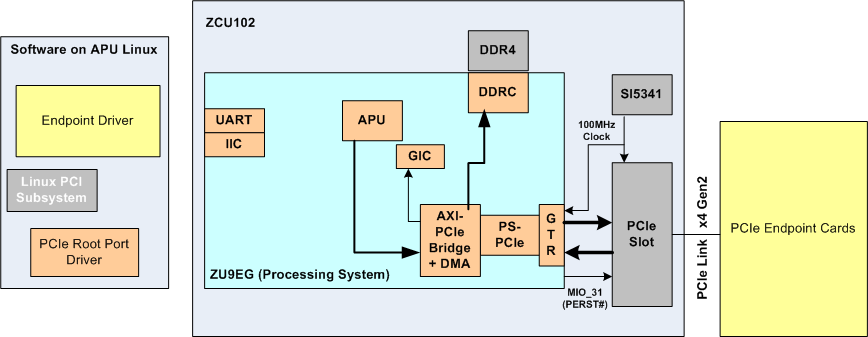

This page gives an overview of the Root Port driver for the PCI Express controller, which is available as part of the ZynqMP processing system.

Controller for PCI Express

PCI Express (abbreviated as PCIe) is a bus standard designed to replace the older PCI, PCI-X, and AGP standards. PCIe is used in server, consumer, and industrial applications, either as a motherboard-level interconnect to link peripherals or as an expansion card interface for add-in boards. The Zynq® UltraScale+™ MPSoC provides a controller for PCI Express® that consists of a PCI Express v2.1 compliant integrated block, an AXI-PCIe bridge, and DMA modules. The AXI-PCIe bridge provides high-performance bridging between PCIe and AXI.

The controller for PCIe supports both Endpoint and Root Port modes of operation and supports links up to x4 Gen2. For more information about the controller for PCI Express, refer to the Zynq UltraScale+ MPSoC TRM (UG1085).

Hardware Setup

The details here target the ZCU102 hardware platform.

The PetaLinux ZCU102 BSP provides an FSBL configured for an x2 Gen2 link by default. For other link configurations, an appropriate FSBL should be generated via PCW in Vivado.

Tested End Point cards:

1. Broadcom PCIe NIC card

2. Realtek NIC card

3. KC705, KCU105, VCU108 with PIO designs (Xilinx PCIe Endpoint Example designs)

4. Intel NVMe SSD

5. Intel NIC card

6. PCIe-SATA

7. PCIe-USB

8. PLX Switch with Endpoint

Root Port Driver Configuration

The PCI/PCIe subsystem support and the Root Port driver are enabled by default in the ZynqMP kernel configuration, and the related code is always built with the kernel. The user therefore does not need to change anything in the configuration files to bring PCIe support into the ZynqMP kernel. In the default configuration, the driver is built with Message Signaled Interrupts (MSI) support.

The driver is available at:

https://github.com/Xilinx/linux-xlnx/blob/master/drivers/pci/controller/pcie-xilinx-nwl.c

End Point Driver Configuration

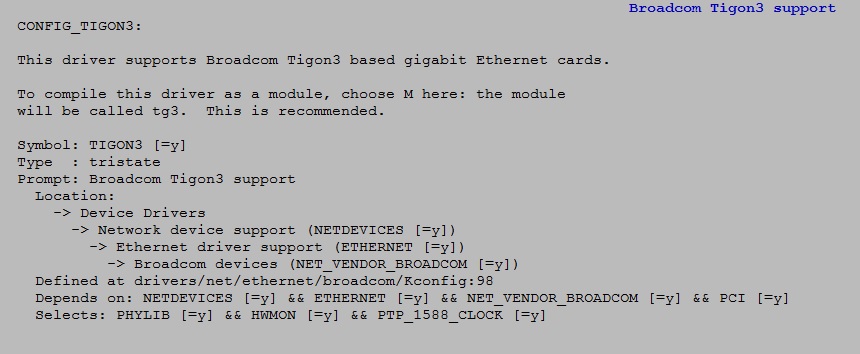

This page demonstrates the Root Port driver using a Broadcom NIC Endpoint, for which the NIC driver should be enabled in the kernel as shown:

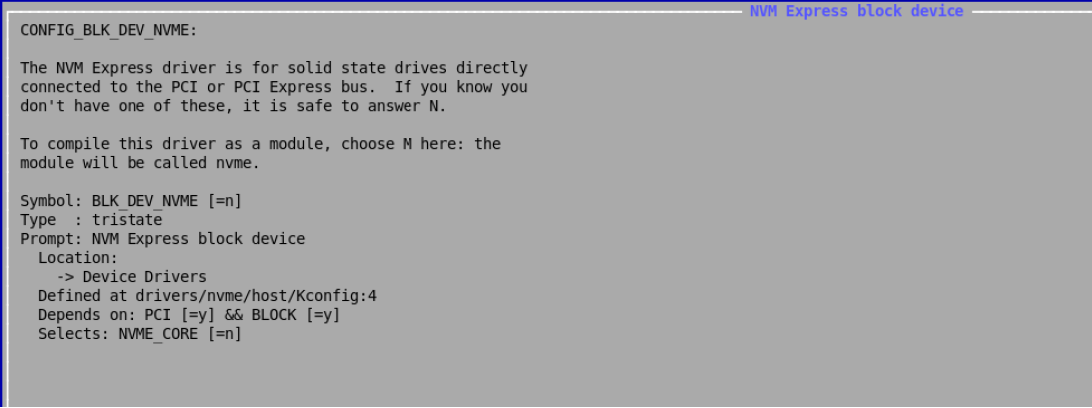

To use an NVMe SSD as the Endpoint, the NVMe driver must be enabled in the kernel.

For other Endpoint cards, ensure the respective driver is loaded into the kernel.
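The configuration described above can be spot-checked against a kernel .config file. The following is a minimal sketch, not an official procedure: check_kconfig is a hypothetical helper name, and the symbols used in the usage note (CONFIG_PCI, CONFIG_PCI_MSI, CONFIG_PCIE_XILINX_NWL for the Root Port driver, CONFIG_TIGON3 for the Broadcom tg3 driver, CONFIG_BLK_DEV_NVME for NVMe) are assumed to match the Kconfig names in the kernel tree in use.

```shell
# check_kconfig FILE SYMBOL...
# Prints the state of each symbol as recorded in FILE and returns
# non-zero if at least one symbol is not enabled (neither =y nor =m).
check_kconfig() {
    cfg=$1
    shift
    rc=0
    for sym in "$@"; do
        # A line such as CONFIG_TIGON3=y or CONFIG_TIGON3=m means enabled.
        val=$(sed -n "s/^${sym}=\(.*\)$/\1/p" "$cfg" | head -n 1)
        if [ -n "$val" ]; then
            echo "$sym=$val"
        else
            echo "$sym is not set"
            rc=1
        fi
    done
    return $rc
}
```

For example, from the kernel build directory: check_kconfig .config CONFIG_PCI CONFIG_PCI_MSI CONFIG_PCIE_XILINX_NWL CONFIG_TIGON3 CONFIG_BLK_DEV_NVME. Drivers for other Endpoint cards can be appended to the symbol list.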

Rootfs Configuration

Enable the lspci utility in the rootfs under "Filesystem Packages->console/utils->pciutils->libpci3/pciutils/pciutils-ids/pciutils-lic".

Device Tree binding

If the PCIe core is configured in the hardware design, the device tree node for the ZynqMP PCIe core is generated automatically by the Device Tree BSP.

The steps to generate the device tree are documented here:

http://www.wiki.xilinx.com/Build+Device+Tree+Blob

A sample binding is shown below. The DT properties are described in the device tree binding documentation for the driver.

nwl_pcie: pcie@fd0e0000 {

#address-cells = <3>;

#size-cells = <2>;

compatible = "xlnx,nwl-pcie-2.11";

#interrupt-cells = <1>;

msi-controller;

device_type = "pci";

interrupt-parent = <&gic>;

interrupts = <0 114 4>, <0 115 4>, <0 116 4>, <0 117 4>, <0 118 4>;

interrupt-names = "msi0", "msi1", "intx", "dummy", "misc";

interrupt-map-mask = <0x0 0x0 0x0 0x7>;

interrupt-map = <0x0 0x0 0x0 0x1 &pcie_intc 0x1>,

<0x0 0x0 0x0 0x2 &pcie_intc 0x2>,

<0x0 0x0 0x0 0x3 &pcie_intc 0x3>,

<0x0 0x0 0x0 0x4 &pcie_intc 0x4>;

clocks = <&clkc 23>;

msi-parent = <&nwl_pcie>;

reg = <0x0 0xfd0e0000 0x0 0x1000>,

<0x0 0xfd480000 0x0 0x1000>,

<0x80 0x00000000 0x0 0x1000000>;

reg-names = "breg", "pcireg", "cfg";

ranges = <0x02000000 0x00000000 0xe0000000 0x00000000 0xe0000000 0x00000000 0x10000000 /* non-prefetchable memory */

0x43000000 0x00000006 0x00000000 0x00000006 0x00000000 0x00000002 0x00000000>;/* prefetchable memory */

pcie_intc: legacy-interrupt-controller {

interrupt-controller;

#address-cells = <0>;

#interrupt-cells = <1>;

};

};
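The first cell of each ranges entry in the sample binding encodes the PCI address space type. As a rough worked example, the sketch below decodes that cell, assuming the standard PCI bus binding layout (bits 25:24 hold the space code, bit 30 is the prefetchable flag). decode_pci_space is a hypothetical helper name used only for illustration.

```shell
# decode_pci_space CELL
# Decodes the first (phys.hi) cell of a PCI "ranges" entry.
decode_pci_space() {
    hi=$(( $1 ))
    space=$(( (hi >> 24) & 0x3 ))   # bits 25:24: address space code
    pref=$(( (hi >> 30) & 0x1 ))    # bit 30: prefetchable flag
    case $space in
        0) name="config" ;;
        1) name="io" ;;
        2) name="mem32" ;;
        3) name="mem64" ;;
    esac
    if [ "$pref" -eq 1 ]; then
        name="$name prefetchable"
    fi
    echo "$name"
}

decode_pci_space 0x02000000   # prints: mem32
decode_pci_space 0x43000000   # prints: mem64 prefetchable
```

The two results agree with the comments in the sample binding: the first window is 32-bit non-prefetchable memory and the second is 64-bit prefetchable memory.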

Test Procedure

- Load Linux onto the ZCU102.

- After Linux boots successfully, the boot log shows that the Broadcom NIC endpoint driver has been probed. Run the command ‘lspci’ from the user prompt; it shows the device ID and manufacturer ID of the Broadcom NIC card. This confirms that the device has been enumerated correctly.

- The Broadcom NIC shows up as the eth1 interface in Linux. Run the command ‘ifconfig eth1 up’ to bring up the Ethernet interface. This step confirms that memory transactions on the PCIe bus are working.

- Assign an IP address, either statically or via DHCP.

- Ping a device with a known IP address.

- With an MSI-enabled Linux image, the MSI interrupt count can be observed to increase (cat /proc/interrupts).
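The last step above can be scripted by summing the per-CPU counters of the interrupt line that belongs to the interface. This is a minimal sketch under stated assumptions: irq_total is a hypothetical helper name, and it assumes the /proc/interrupts layout shown later on this page, where the first row lists the CPUs and the interface name is the last field of its row.

```shell
# irq_total NAME [FILE]
# Sums the per-CPU counts of every row whose last field equals NAME.
# FILE defaults to /proc/interrupts.
irq_total() {
    name=$1
    file=${2:-/proc/interrupts}
    awk -v name="$name" '
        NR == 1 { ncpu = NF; next }      # header row: one field per CPU
        $NF == name {
            for (i = 2; i <= ncpu + 1; i++) total += $i
        }
        END { print total + 0 }
    ' "$file"
}

# Example use on the target (left commented out here):
#   before=$(irq_total eth1)
#   ping -c 5 192.168.1.4
#   after=$(irq_total eth1)
#   [ "$after" -gt "$before" ] && echo "interrupt count increased"
```

The interface name eth1 and the address 192.168.1.4 are taken from the example session on this page and will differ on other setups.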

Kernel Console Output

Driver Initialization for ZynqMP

nwl-pcie fd0e0000.pcie: Link is UP

PCI host bridge /amba/pcie@fd0e0000 ranges:

No bus range found for /amba/pcie@fd0e0000, using [bus 00-ff]

MEM 0xe1000000..0xefffffff -> 0xe1000000

nwl-pcie fd0e0000.pcie: PCI host bridge to bus 0000:00

pci_bus 0000:00: root bus resource [bus 00-ff]

pci_bus 0000:00: root bus resource [mem 0xe1000000-0xefffffff]

pci 0000:00:00.0: BAR 8: assigned [mem 0xe1000000-0xe10fffff]

pci 0000:01:00.0: BAR 0: assigned [mem 0xe1000000-0xe100ffff 64bit]

pci 0000:01:00.0: BAR 6: assigned [mem 0xe1010000-0xe101ffff pref]

pci 0000:00:00.0: PCI bridge to [bus 01-0c]

pci 0000:00:00.0: bridge window [mem 0xe1000000-0xe10fffff]

xilinx-dpdma fd4c0000.dma: Xilinx DPDMA engine is probed

xenfs: not registering filesystem on non-xen platform

Serial: 8250/16550 driver, 4 ports, IRQ sharing disabled

ff000000.serial: ttyPS0 at MMIO 0xff000000 (irq = 217, base_baud = 6250000) is a xuartps

console [ttyPS0] enabled

console [ttyPS0] enabled

bootconsole [uart0] disabled

bootconsole [uart0] disabled

ff010000.serial: ttyPS1 at MMIO 0xff010000 (irq = 218, base_baud = 6250000) is a xuartps

Unable to detect cache hierarchy from DT for CPU 0

brd: module loaded

loop: module loaded

ahci-ceva fd0c0000.ahci: AHCI 0001.0301 32 slots 2 ports 6 Gbps 0x3 impl platform mode

ahci-ceva fd0c0000.ahci: flags: 64bit ncq sntf pm clo only pmp fbs pio slum part ccc sds apst

scsi host0: ahci-ceva

scsi host1: ahci-ceva

ata1: SATA max UDMA/133 mmio [mem 0xfd0c0000-0xfd0c1fff] port 0x100 irq 214

ata2: SATA max UDMA/133 mmio [mem 0xfd0c0000-0xfd0c1fff] port 0x180 irq 214

mtdoops: mtd device (mtddev=name/number) must be supplied

m25p80 spi0.0: found n25q512a, expected m25p80

m25p80 spi0.0: Controller not in SPI_TX_QUAD mode, just use extended SPI mode

m25p80 spi0.0: n25q512a (131072 Kbytes)

8 ofpart partitions found on MTD device spi0.0

Creating 8 MTD partitions on "spi0.0":

0x000000000000-0x000000800000 : "boot"

0x000000800000-0x000000840000 : "bootenv"

0x000000840000-0x000001240000 : "kernel"

0x000001240000-0x000008000000 : "spare"

0x000000000000-0x000000100000 : "qspi-fsbl-uboot"

0x000000100000-0x000000600000 : "qspi-linux"

0x000000600000-0x000000620000 : "qspi-device-tree"

0x000000620000-0x000000c00000 : "qspi-rootfs"

libphy: Fixed MDIO Bus: probed

CAN device driver interface

libphy: MACB_mii_bus: probed

macb ff0e0000.ethernet eth0: Cadence GEM rev 0x50070106 at 0xff0e0000 irq 29 (00:0a:35:00:22:01)

macb ff0e0000.ethernet eth0: attached PHY driver [Generic PHY] (mii_bus:phy_addr=ff0e0000.etherne:0c, irq=-1)

tg3.c:v3.137 (May 11, 2014)

pci 0000:00:00.0: enabling device (0000 -> 0002)

Broadcom NIC card probing

tg3 0000:01:00.0: enabling device (0000 -> 0002)

tg3 0000:01:00.0 eth1: Tigon3 [partno(BCM95751A519FLP) rev 4201] (PCI Express) MAC address 00:10:18:32:d2:a9

tg3 0000:01:00.0 eth1: attached PHY is 5750 (10/100/1000Base-T Ethernet) (WireSpeed[1], EEE[0])

tg3 0000:01:00.0 eth1: RXcsums[1] LinkChgREG[0] MIirq[0] ASF[0] TSOcap[1]

tg3 0000:01:00.0 eth1: dma_rwctrl[76180000] dma_mask[64-bit]

lspci output

root@Xilinx: lspci

00:00.0 PCI bridge: Xilinx Corporation Device d022

01:00.0 Ethernet controller: Broadcom Corporation NetXtreme BCM5751 Gigabit Ethernet PCI Express (rev 21)
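Beyond enumeration, the verbose lspci output also reports the negotiated link speed and width in its LnkSta line, which can be compared with the link configuration built into the FSBL. The following is a sketch only: link_status is a hypothetical helper name, and it assumes the LnkSta line has the usual pciutils form "LnkSta: Speed 5GT/s, Width x2, ...".

```shell
# link_status
# Reads "lspci -vv" output on stdin and prints "<speed> <width>"
# for each LnkSta line found.
link_status() {
    sed -n 's/.*LnkSta:[[:space:]]*Speed \([^ ,]*\).*Width \(x[0-9][0-9]*\).*/\1 \2/p'
}

# Example use on the target (left commented out here):
#   lspci -s 01:00.0 -vv | link_status
```

A Gen2 link reports a speed of 5GT/s, and the width field gives the number of lanes that trained.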

Ethernet Interface

root@Xilinx: ifconfig -a eth1

eth1 Link encap:Ethernet HWaddr 00:10:18:32:D2:A9

BROADCAST MULTICAST MTU:1500 Metric:1

RX packets:0 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:0 (0.0 B) TX bytes:0 (0.0 B)

Interrupt:222

Testing of Ethernet interface

root@Xilinx: ifconfig eth1 192.168.1.2

root@Xilinx: IPv6: ADDRCONF(NETDEV_UP): eth1: link is not ready

tg3 0000:01:00.0 eth1: Link is up at 1000 Mbps, full duplex

tg3 0000:01:00.0 eth1: Flow control is on for TX and on for RX

IPv6: ADDRCONF(NETDEV_CHANGE): eth1: link becomes ready

root@Xilinx:

root@Xilinx: ping 192.168.1.4

PING 192.168.1.4 (192.168.1.4): 56 data bytes

64 bytes from 192.168.1.4: seq=0 ttl=128 time=0.794 ms

64 bytes from 192.168.1.4: seq=1 ttl=128 time=0.655 ms

64 bytes from 192.168.1.4: seq=2 ttl=128 time=0.670 ms

64 bytes from 192.168.1.4: seq=3 ttl=128 time=0.354 ms

64 bytes from 192.168.1.4: seq=4 ttl=128 time=0.918 ms

64 bytes from 192.168.1.4: seq=5 ttl=128 time=0.724 ms

64 bytes from 192.168.1.4: seq=6 ttl=128 time=0.719 ms

64 bytes from 192.168.1.4: seq=7 ttl=128 time=0.712 ms

--- 192.168.1.4 ping statistics ---

27 packets transmitted, 27 packets received, 0% packet loss

round-trip min/avg/max = 0.354/0.652/0.918 ms

root@Xilinx:

MSI Interrupts

root@Xilinx-ZCU102-2016_3:~# cat /proc/interrupts

CPU0 CPU1 CPU2 CPU3

1: 0 0 0 0 GICv2 29 Edge arch_timer

2: 9636 8431 7931 9452 GICv2 30 Edge arch_timer

12: 0 0 0 0 GICv2 156 Level zynqmp-dma

13: 0 0 0 0 GICv2 157 Level zynqmp-dma

14: 0 0 0 0 GICv2 158 Level zynqmp-dma

15: 0 0 0 0 GICv2 159 Level zynqmp-dma

16: 0 0 0 0 GICv2 160 Level zynqmp-dma

17: 0 0 0 0 GICv2 161 Level zynqmp-dma

18: 0 0 0 0 GICv2 162 Level zynqmp-dma

19: 0 0 0 0 GICv2 163 Level zynqmp-dma

20: 0 0 0 0 GICv2 164 Level Mali_GP_MMU, Mali_GP, Mali_PP0_MMU, Mali_PP0, Mali_PP1_MMU, Mali_PP1

30: 0 0 0 0 GICv2 95 Level eth0, eth0

206: 302 0 0 0 GICv2 49 Level cdns-i2c

207: 40 0 0 0 GICv2 50 Level cdns-i2c

209: 0 0 0 0 GICv2 150 Level nwl_pcie:misc

214: 15 0 0 0 GICv2 47 Level ff0f0000.spi

215: 0 0 0 0 GICv2 58 Level ffa60000.rtc

216: 0 0 0 0 GICv2 59 Level ffa60000.rtc

217: 0 0 0 0 GICv2 165 Level ahci-ceva[fd0c0000.ahci]

219: 0 0 0 0 GICv2 187 Level arm-smmu global fault

220: 882 0 0 0 GICv2 53 Level xuartps

223: 0 0 0 0 GICv2 154 Level fd4c0000.dma

225: 408 0 0 0 nwl_pcie:msi 524288 Edge eth1

226: 0 0 0 0 GICv2 97 Level xhci-hcd:usb1

IPI0: 1040 1287 627 1142 Rescheduling interrupts

IPI1: 59 46 56 80 Function call interrupts

IPI2: 0 0 0 0 CPU stop interrupts

IPI3: 0 0 0 0 Timer broadcast interrupts

IPI4: 0 0 0 0 IRQ work interrupts

IPI5: 0 0 0 0 CPU wake-up interrupts

Err: 0

AER services with PS PCIe as Root Port have not been tested yet.


© Copyright 2019 - 2022 Xilinx Inc. Privacy Policy