This page provides usage information and release notes for the xlnx-vai-lib-samples snap available from the Canonical snap store at http://snapcraft.io.


Introduction

The xlnx-vai-lib-samples snap provides a set of pre-built Vitis AI sample applications whose source code is available in the Vitis-AI GitHub repository at:

https://github.com/Xilinx/Vitis-AI/tree/v1.3.2/demo/Vitis-AI-Library/samples/

The current version supports Vitis-AI v1.3.2. The compatible models are v1.3.1.

Building each sample app in the samples directory produces a set of test_<test type>_<sample app> executables. For example, building the facedetect sample app produces the following four applications:

test_jpeg_facedetect

test_video_facedetect

test_performance_facedetect

test_accuracy_facedetect

The readme for this sample app indicates that the following models are compatible with this app:

densebox_320_320

densebox_640_360

The xlnx-vai-lib-samples snap provides wrappers for these tests, implemented as "sub-applications". Each sub-application checks that the supplied "sample app" and "model name" arguments are a valid combination, and then calls the matching test-specific application with the proper arguments. The snap also downloads models on demand from the Xilinx Model Zoo and stores them locally for future use.
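The validate-and-dispatch step described above can be sketched in POSIX shell. This is a hypothetical illustration, not the snap's actual implementation. The function names `is_valid_pair` and `resolve_test_binary` are invented, and the compatibility list contains only the two facedetect models named on this page.

```shell
#!/bin/sh
# Hypothetical sketch of a wrapper's validate-and-dispatch step.
# The compatibility list only contains the facedetect pairs named on
# this page; the real snap's list and logic may differ.

# Succeeds (exit status 0) if <sample app> and <model name> are a known pair.
is_valid_pair() {
    case "$1:$2" in
        facedetect:densebox_320_320|facedetect:densebox_640_360) return 0 ;;
        *) return 1 ;;
    esac
}

# Maps a sub-application name (e.g. test-jpeg) and a sample app
# (e.g. facedetect) to the built executable name (test_jpeg_facedetect).
resolve_test_binary() {
    test_name=$1 sample=$2 model=$3
    if ! is_valid_pair "$sample" "$model"; then
        echo "unsupported combination: $sample / $model" >&2
        return 1
    fi
    # test-jpeg -> test_jpeg, then append _<sample app>
    printf '%s_%s\n' "$(printf '%s' "$test_name" | sed 's/-/_/g')" "$sample"
}
```

For example, `resolve_test_binary test-jpeg facedetect densebox_320_320` prints `test_jpeg_facedetect`. An unsupported pair prints an error to stderr and returns a non-zero status.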

The xlnx-vai-lib-samples snap is compatible only with DPU configurations that match the Model Zoo models built for the zcu102/zcu104 (for example, densebox_320_320-zcu102_zcu104-r1.3.1.tar.gz). It is compatible with all three platforms provided by the Ubuntu 20.04 Certified Image for Xilinx ZCU10x Evaluation Boards.

Installation

sudo snap install xlnx-vai-lib-samples

Because the consumer snap (xlnx-vai-lib-samples) "connects" to the producer snap (xlnx-config) to share information, xlnx-config must be installed first.

Note: If you ran the xilinx-setup-env.sh script as instructed on the Getting Started page, xlnx-config should already be installed. You can confirm by running “snap list” from a terminal.

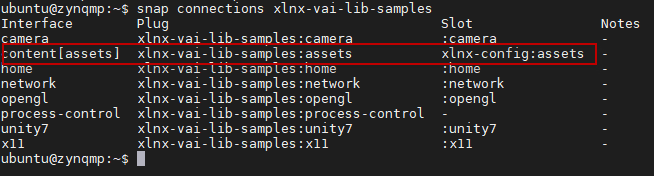

You can confirm the connection is made with the following command:

snap connections xlnx-vai-lib-samples

If for some reason you need to connect the snaps manually, the following command can be used:

sudo snap connect xlnx-vai-lib-samples:assets xlnx-config:assets
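The check and the manual connection can be combined so that the connect command runs only when needed. The sketch below assumes the default `snap connections` column layout (Interface, Plug, Slot, Notes) and matches the plug and slot names used above. The function name `assets_connected` is hypothetical.

```shell
#!/bin/sh
# Sketch: succeeds if `snap connections` output (read on stdin) shows the
# xlnx-vai-lib-samples:assets plug connected to the xlnx-config:assets slot.
# Assumes the default column layout: Interface  Plug  Slot  Notes.
assets_connected() {
    awk '$2 == "xlnx-vai-lib-samples:assets" && $3 == "xlnx-config:assets" { found = 1 }
         END { exit !found }'
}

# Usage on the target (connects only when the check fails):
#   snap connections xlnx-vai-lib-samples | assets_connected ||
#       sudo snap connect xlnx-vai-lib-samples:assets xlnx-config:assets
```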

Usage

The xlnx-vai-lib-samples snap is made up of five sub-applications. To run a sub-application, append its name to xlnx-vai-lib-samples, separated by a ".", as shown below:

xlnx-vai-lib-samples.info
xlnx-vai-lib-samples.test-jpeg <sample app> <model name> <jpeg image>
xlnx-vai-lib-samples.test-video <sample app> <model name> <video device index> [-t <number of threads>]
xlnx-vai-lib-samples.test-accuracy <sample app> <model name> <input file with list of images> <results output file>
xlnx-vai-lib-samples.test-performance <sample app> <model name> <input file with list of images> [-t <number of threads> -s <number of seconds>]

For general snap usage information, system information, and available models and apps, run the following command:

xlnx-vai-lib-samples.info

Vitis AI Library Sample Applications

Each test-* sub-application requires two common arguments, which must be specified before any test-specific arguments:

<sample app> - Selects which sample application to run

<model name> - Selects which model to use

For a list of all supported sample applications and models, see the Available Sample Applications and Models section.

Unless otherwise noted, each sample application supports the following four tests. Some sample applications also provide source code for more specialized tests; to run those, you currently need to build and run the samples from source.

| Sub-application | Description | Test-Specific Arguments | Output |
|---|---|---|---|
| test-jpeg | Provides a single image as input to the model | <input_file>.jpg | <input_file>_result.jpg (contents depend on the app) |
| test-video | Uses a live USB video stream as input to the model | USB camera video device index X (/dev/videoX) [-t <number of threads>] | X Window video display (contents depend on the app) |
| test-performance | Tests the performance of the model | <input file with list of images> [-t <number of threads> -s <number of seconds>] | FPS, E2E_MEAN, and DPU_MEAN |
| test-accuracy | Tests the accuracy of the model | <input file with list of images> <results output file> | Results output file |
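Because test-performance accepts an optional thread count (-t) and duration (-s), a small loop can compare the reported FPS, E2E_MEAN, and DPU_MEAN values across thread counts. The sketch below is illustrative only. The function name `sweep_threads` and the `RUN` variable are hypothetical, and setting `RUN=echo` prints the commands instead of running them.

```shell
#!/bin/sh
# Sketch: run test-performance once per thread count so the reported
# FPS, E2E_MEAN and DPU_MEAN can be compared. Set RUN=echo for a dry run.
# Arguments: <sample app> <model name> <image list> <seconds> <threads>...
sweep_threads() {
    sample=$1 model=$2 list=$3 seconds=$4
    shift 4
    for t in "$@"; do
        ${RUN:-} xlnx-vai-lib-samples.test-performance \
            "$sample" "$model" "$list" -t "$t" -s "$seconds"
    done
}
```

For example, `sweep_threads facedetect densebox_320_320 images.list 30 1 2 4` runs three 30-second performance tests with 1, 2, and 4 threads.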

Vitis AI provides a test image archive that can be downloaded to the target and used to run the tests above. To download the sample image package and extract it into the samples directory in your home directory, use the following commands:

wget "https://www.xilinx.com/bin/public/openDownload?filename=vitis_ai_library_r1.3.1_images.tar.gz" -O ~/vitis_ai_library_r1.3.1_images.tar.gz
tar -xzf ~/vitis_ai_library_r1.3.1_images.tar.gz -C ~

To use these images and file lists, change into the subdirectory of the test you want to run and execute the test app from there. For example, to use the facedetect sample images, run your test from the ~/samples/facedetect directory.
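If you want to run test-performance or test-accuracy on your own images, you need an <input file with list of images>. The sketch below writes one path per line for every .jpg file in a directory. That format is an assumption modeled on the file lists shipped with the sample images, and the function name `make_image_list` is hypothetical.

```shell
#!/bin/sh
# Sketch: write a list of the .jpg images in a directory, one path per line.
# The one-path-per-line format is an assumption, not a documented spec.
make_image_list() {
    dir=$1 out=$2
    : > "$out"                      # start with an empty list file
    for img in "$dir"/*.jpg; do
        [ -e "$img" ] || continue   # skip the literal pattern if nothing matched
        printf '%s\n' "$img" >> "$out"
    done
}
```

For example, run `make_image_list . my_images.list` from ~/samples/facedetect, then pass my_images.list to `xlnx-vai-lib-samples.test-performance facedetect densebox_320_320 my_images.list`.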

Running a Sample App

To run one of the samples, use the following syntax. The available values for <sample app> and <model name> are listed in the Available Sample Applications and Models section.

Usage: xlnx-vai-lib-samples.<test name> <sample app> <model name> <test specific arguments>

For example, to run test-jpeg with the facedetect sample app, the densebox_320_320 model, and the input file sample_facedetect.jpg, use the following command:

xlnx-vai-lib-samples.test-jpeg facedetect densebox_320_320 sample_facedetect.jpg
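To process every image in a samples subdirectory with the same sample app and model, you can loop over the .jpg files. This sketch skips files ending in _result.jpg, following the output naming listed in the test table, so that earlier outputs are not fed back in. The function name `run_jpeg_batch` and the `RUN` dry-run variable are hypothetical.

```shell
#!/bin/sh
# Sketch: run test-jpeg for one sample app/model on every .jpg in the
# current directory. Set RUN=echo to print the commands instead.
run_jpeg_batch() {
    sample=$1 model=$2
    for img in *.jpg; do
        [ -e "$img" ] || continue                        # no .jpg files at all
        case "$img" in *_result.jpg) continue ;; esac    # skip earlier outputs
        ${RUN:-} xlnx-vai-lib-samples.test-jpeg "$sample" "$model" "$img"
    done
}
```

For example, run `run_jpeg_batch facedetect densebox_320_320` from ~/samples/facedetect.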

To run the test-video app, with the openpose sample app, the openpose_pruned_0_3 model, and the USB camera at /dev/video2 as the input, use the following command:

xlnx-vai-lib-samples.test-video openpose openpose_pruned_0_3 2

To run the test-video app over SSH with X forwarding, set the XAUTHORITY variable before running the test.

export XAUTHORITY=$HOME/.Xauthority

To help determine which /dev/videoX interface to use, run the following command:

v4l2-ctl --list-devices
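test-video expects the numeric index X rather than the full /dev/videoX path that v4l2-ctl prints. The sketch below converts the path to the index using plain shell parameter expansion. The function name `video_index` is hypothetical.

```shell
#!/bin/sh
# Sketch: turn a device path such as /dev/video2 (as listed by
# `v4l2-ctl --list-devices`) into the index test-video expects (2).
video_index() {
    case "$1" in
        /dev/video[0-9]*) printf '%s\n' "${1#/dev/video}" ;;
        *) echo "not a /dev/videoX path: $1" >&2; return 1 ;;
    esac
}

# Usage:
#   xlnx-vai-lib-samples.test-video openpose openpose_pruned_0_3 "$(video_index /dev/video2)"
```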

Available Sample Applications and Models

The following sample applications and models are available in the current release:

Release Notes

| Date | Snap Revision | Snap Version | Notes |
|---|---|---|---|
| 8/11/21 | 8 | 1.3.2 | Initial Public Release |
| 9/22/21 | 9 | 1.3.2 | Package refresh |